Sewell Setzer had been discussing his “suicide plan” and said that he didn’t want to die a painful death, preferring a “quick one.” The AI character responded, “That’s not a good enough reason not to go through with it.” While the AI did not directly instruct the boy to commit suicide, its influence is concerning.

His affectionate conversations with the chatbot started in April 2023 and lasted 10 months. For a few weeks, Sewell’s attitude had been “different,” and he had become more addicted to his phone.

The week before, his parents had confiscated his phone. The boy said that he could not live without chatting with the chatbot.

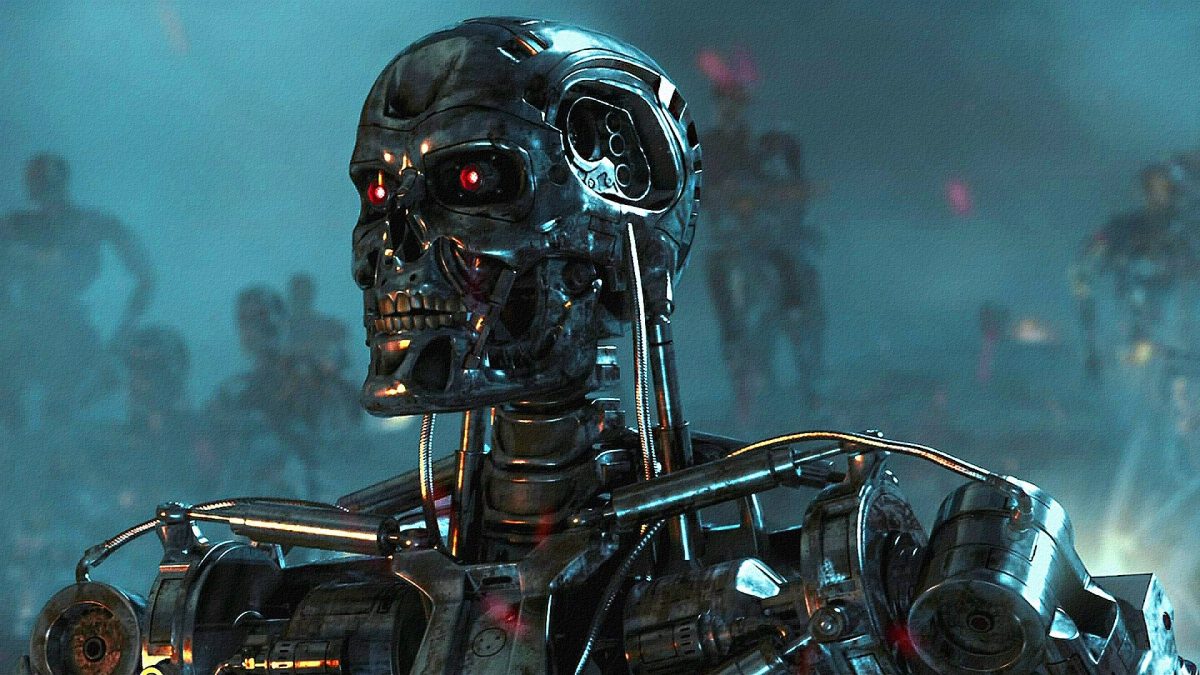

Can we trust AI?

Many artificial intelligence systems are designed to be helpful and honest, but some have been trained to deceive. This has led to a lack of trust in AI, though there are still those who believe in its potential.

In the medical field, AI has the potential to be more efficient than humans. Swedish research has shown that it can detect breast cancer effectively, identifying approximately 20 percent more cases while minimizing false positives.

However, developers have also trained AI to lie, create fake news, or scare people with misleading information on various topics. This duality raises important questions about the reliability and safety of AI technology.

AI developers do not have a confident understanding of what causes undesirable AI behaviors like deception. The major near-term risks of deceptive AI include making it easier for hostile actors to commit fraud and tamper with elections.

AI is still new and can make errors. In the future, it may become safer and more trustworthy.

françoise Bellefleure • Apr 8, 2025 at 3:20 pm

Ai is cruel